Artificial Intelligence Round-Up: February 2024

How AI tools are disrupting medicine, politics, coding, and the Internet itself

Dear Readers,

While AI and large language models have receded from the headlines a bit, at least relative to the high-stakes drama of OpenAI briefly firing Sam Altman last fall, there continue to be important developments from multiple companies. These products are evolving rapidly, and we’re beginning to see how they could disrupt (and in some cases are already disrupting) healthcare, the tech sector, elections, and even the Internet itself.

I hope you enjoy this curated selection of interesting news in AI in early 2024. As always, if you have questions or comments, leave them below or shoot me an email: allscience@substack.com

—Eric


AI scribes come to medicine

Anyone who has worked in healthcare knows that one of the biggest headaches is the mountain of documentation. As patients, many of us have been frustrated to find ourselves nominally in the same room as a doctor who spends the entire time staring at a laptop screen, clicking boxes in patient-notes software, and mumbling absentmindedly while trying to juggle administrative tasks with active listening, clinical reasoning, and empathy.

One old-school solution to this problem was dictation into audio recorders, with trained medical scribes filling out the paperwork. Later, electronic health records (EHRs) came on the scene in the name of streamlining both medical care and data, only to increase the workload and frustration of providers (indeed, EHRs are a leading source of burnout for physicians). Then, to cope with all of that added burden, practices in human and veterinary medicine experimented with having scribes in the room.

A new commentary article in the New England Journal of Medicine discusses the newest frontier in this area: ambient AI scribes. It presents preliminary data on the implementation and early outcomes of ambient AI scribes at The Permanente Medical Group (TPMG). Ambient AI scribes use machine learning to transcribe and summarize patient encounters in real time, aiming to lighten clinicians’ documentation workload and improve patient care. In a rapid regional pilot, TPMG enabled ambient AI technology for 10,000 physicians and staff, covering 303,266 patient encounters across various specialties. The rollout included comprehensive training, support, and ongoing monitoring, and drew positive feedback from both physicians and patients.

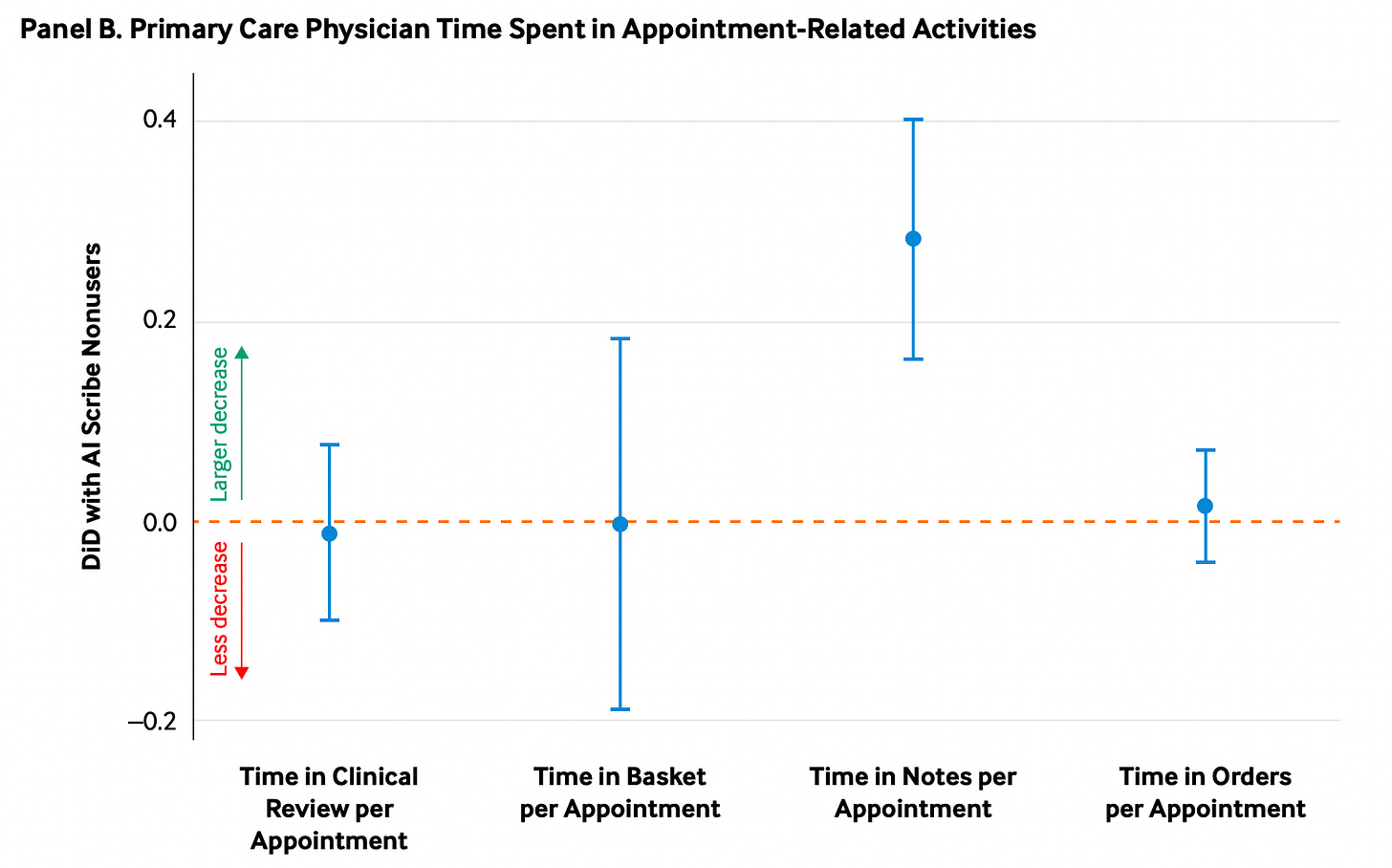

Physicians reported more meaningful interactions with patients and less clerical work, while early patient feedback highlighted improved interactions with their physicians. Preliminary assessments show that ambient AI scribes produce high-quality documentation that requires minimal editing. Statistical analyses indicated that AI users spent less time writing and editing notes, with no change in appointment activities unrelated to documentation.

However, the authors also noted challenges such as integration with EHRs, language limitations, and the need for continuous improvement and evaluation of the technology. The commentary underscores the potential of ambient AI scribes to improve clinical workflows, documentation quality, and the overall healthcare delivery experience, albeit with attention to ongoing optimization and evaluation.

All Science Great & Small is a reader-supported guide to veterinary medicine, science, and technology. Both free and paid subscriptions are available. If you want to support my work, the best way is by taking out a paid subscription 👇

Does AI boost productivity at the cost of quality?

The previous study shows a lot of promise for AI improving efficiency at work, but this software is not all sunshine and roses. Shifting from healthcare to tech, a recent white paper from the code analytics company GitClear, titled “Coding on Copilot: 2023 Data Shows Downward Pressure on Code Quality,” investigated the impact of large language models (LLMs) like GitHub Copilot on programming practices. It cites the big, splashy finding from GitHub’s own research that developers write code 55% faster with Copilot.

It also suggests, as other AI studies have found, that junior coders preferentially use and/or benefit from these tools.

Despite these productivity gains, the paper highlights concerns about code quality. The study, based on 153 million lines of code, finds increased code churn (code that is revised or reverted shortly after being written) and a rise in added and copy/pasted code, which suggests a trend toward lower maintainability and more fragmented code contributions. The authors point to several reasons AI assistants may encourage this pattern:

“1. Being inundated with suggestions for added code, but never suggestions for updating, moving, or deleting code. This is a user interface limitation of the text-based environments where code authoring occurs.

2. Time required to evaluate code suggestions can become costly. Especially when the developer works in an environment with multiple, competing auto-suggest mechanisms (this includes the popular JetBrains IDEs [11])

3. Code suggestion is not optimized by the same incentives as code maintainers. Code suggestion algorithms are incentivized to propose suggestions most likely to be accepted. Code maintainers are incentivized to minimize the amount of code that needs to be read (I.e., to understand how to adapt an existing system).”

This white paper includes a pithy tweet that sums up its whole thesis:

It concludes with recommendations for developers and teams using Copilot. These include investing time in code reviews, focusing on understanding the code suggested by Copilot, and incorporating best practices for maintainability and quality. In the end, I’m reminded of Jeff Goldblum’s famous line from Jurassic Park:

“Your scientists were so preoccupied with whether or not they could, they didn't stop to think if they should.”

Hopefully over time, we will become better at learning when and how to integrate these powerful tools.

Preparing for AI to disrupt elections

Unless you’ve been living under a rock, you’re no doubt aware that 2024 is a presidential election year in the US.

Ugh 🤬

NONE of us are excited about another 7+ months of toxic politics spilling into our news, social media feeds, and even entertainment (somehow politics has managed to infect such inoffensive cultural institutions as Taylor Swift and the NFL). We need to buckle up, because the early signs are that things are gonna get wild…

One of the biggest fears this year for experts who study democracy is the potential for AI to spread misinformation and disrupt elections. This is arguably already happening:

A fake, AI-generated audio clip of a politician in Slovakia discussing vote rigging was released during the 48-hour blackout before the election and may have swung the results.

Turkey’s authoritarian leader, Recep Tayyip Erdoğan, released a campaign deepfake video falsely linking his opponent to a terrorist organization.

In the US, robocalls using an AI-cloned voice of President Biden targeted Democrats ahead of the New Hampshire primary, urging them to stay home and not vote.

Lastly, a recent study in PNAS evaluated how AI may play a role in covert propaganda campaigns in online spaces. Despite increased awareness and efforts by platforms to combat them, such campaigns continue to proliferate across websites, social media platforms, and encrypted messaging apps. This is particularly concerning given advances in language models, which can generate original text, streamline the production of persuasive content, and are becoming increasingly accessible.

To investigate the efficacy of AI-generated propaganda, the authors conducted an experiment comparing the persuasiveness of articles created by GPT-3 (note that this is an older model than the current state of the art, GPT-4) with real-world covert propaganda articles sourced from campaigns attributed to state-backed actors. Analyzing survey responses from a diverse sample of US adults, they found that both types of content were highly persuasive, with GPT-3-generated articles slightly less so but still substantially impactful. Notably, the study highlights that human curation of AI-generated content can enhance its persuasiveness, suggesting that a combination of human oversight and AI technology could be leveraged to produce propaganda with even greater effectiveness.

“…a combination of human oversight and AI technology could be leveraged to produce propaganda with even greater effectiveness”

There are some potential solutions to the proliferation of AI-generated synthetic content, including watermarks and other tactics to verify authenticity as well as increased regulation. However, these are strategies that will take time to implement and require the cooperation of many players who may not have aligned interests. The best thing each of us can do this year is to be aware of this issue, try to get information from reliable sources, and fact-check any claims that seem outlandish or inflammatory before sharing to our networks.

AI-search upends the economics of the Web

Finally, you may have noticed that Google is now integrating generative AI summaries into its search results.

Its top competitor, Microsoft Bing, has had this functionality for a while. Newer products like Perplexity and Arc Search go a step further, browsing the web for you and providing a coherent synopsis with links, images, and more. From a user standpoint, this is actually kind of cool. Imagine having a TL;DR summary for everything online!

On the other hand, anyone who makes money as a content creator or journalist sees the problem here immediately: if you no longer have to visit websites or apps to get the original content, that means a massive drop-off in page views. Nobody seeing pop-up or banner ads. No more affiliate links. In essence, it breaks the business model of the modern Internet. There is a real concern that if everything online simply becomes raw material to be ingested into the maw of BigAI and the original sources don’t make any money, they will go out of business.

Newspapers in particular have long subsidized costly but valuable loss leaders like investigative reporting with ad dollars and with readers who subscribe for the sports section, crosswords, lifestyle pages, and so on. These organizations have scrambled to adopt new business models with varying success. Subscription revenue may be hailed as the way of the future, although it is an open question how well that will work in the long term as every media company moves to charging those fees. Large, well-funded legacy institutions like the New York Times will likely be fine, and may even thrive as competitors go out of business. And small outlets, like Substack writers and creators on Patreon, may make a decent living. The folks who will very likely not be fine are the medium-sized outlets that are already folding left and right. Local newspapers. Pitchfork. Jezebel. Countless others.

If you want to hear more about this, the excellent NYT technology podcast Hard Fork recently discussed the issue and asked the founder of Perplexity some tough questions. It’s a great interview and well worth your time.
