*Will* AI Replace Pathologists and Radiologists?

My evolving thoughts on what this technology can do

A few weeks ago when I was writing my post comparing GPT-3.5 with GPT-4, one feature I tested out a bit—but did not include in the article—was using the Advanced Data Analysis mode to interpret image files. This mode includes a suite of computer vision tools ranging from image classification to object detection to semantic segmentation. Unfortunately, I could never get it to identify much of anything. Here is me trying to get ChatGPT to say there is a cat in this photo (a common task many AI programs can do off-the-shelf):

Needless to say, I was unimpressed 😐

It reminded me of the old Seinfeld episode where Kramer tries to run the automated Moviefone line from home, but obviously can’t tell which buttons the callers are pressing to indicate their selections:

Everything changed when OpenAI released a new beta feature specifically enabling image analysis within the main GPT-4 chat mode. Last week, the veterinary anatomic pathologist Dr. Derick Whitley caused quite a stir on LinkedIn when he showed an example of ChatGPT doing a very capable job interpreting an aggressive canine spleen tumor called hemangiosarcoma and had this bold prediction:

“Unpopular opinion: AI will come for your jobs, pathologists and radiologists”

— Derick Whitley, DVM, DACVP

Now OpenAI had my attention—ChatGPT is not a system that was specifically trained on medical images or to perform an advanced task like this. His post prompted a polarized discussion among pathologists. Some (like myself) admitted the potential for disruption was certainly there and mused about how to futureproof our jobs. Others insisted it could never reach human accuracy.

Well, who is right?

Testing ChatGPT on Medical Images

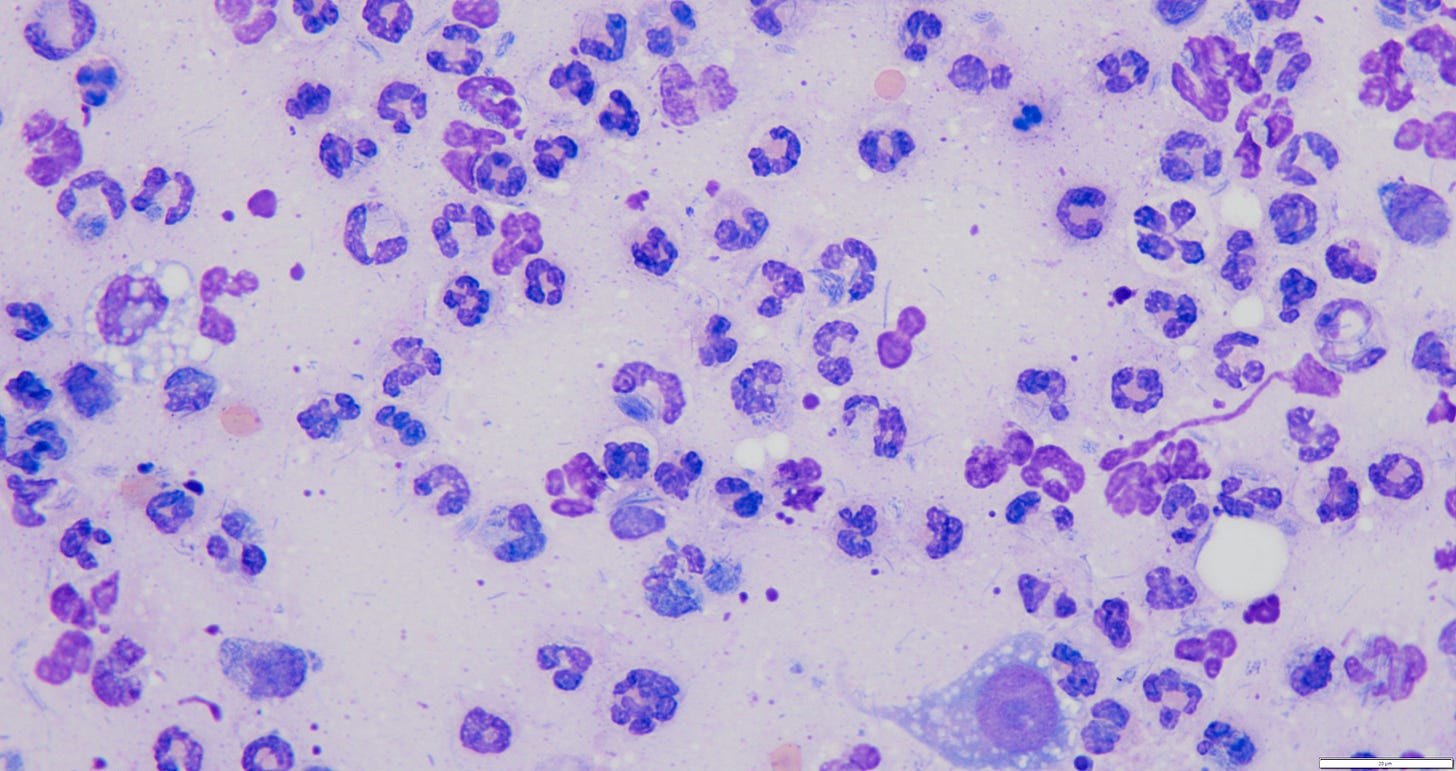

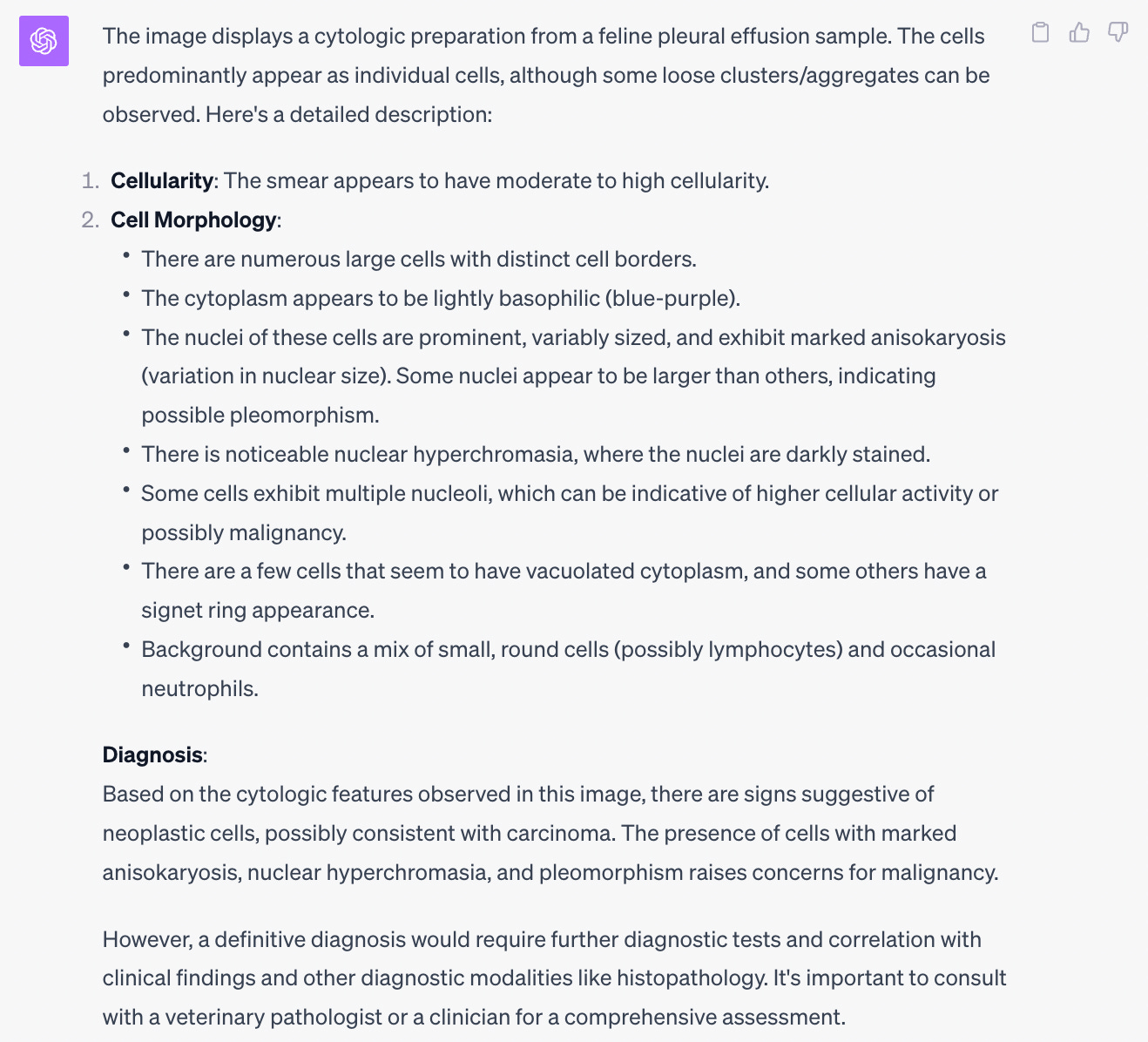

I decided to try it out for myself with a few use cases. First up, I uploaded a representative image from a classic lymphoma case:

What do I think about the results?

At the risk of lowering the bar too much, I think it’s impressive (and a little unsettling) that it is able to even tell what cells these are without specific training.

Before this run, I tested the system blind, without providing any context, and ChatGPT was able to tell it was a Giemsa-stained cytology sample of lymphocytes and blood, so it isn’t just spitballing based on the fact that I told it the sample is a lymph node.

While it eventually picked lymphoma as the most likely diagnosis (correct), it initially hedged its bets, and the way it described the node (a mixed population of sizes including many small lymphocytes) would NOT support the most common forms of canine lymphoma; in fact, that pattern is usually indicative of a benign reactive process.

It takes a long time! The video is just under 5 minutes, and a lot of that time is processing and waiting. A highly skilled and efficient pathologist can easily write up a classic lymphoma case in this amount of time or less, so at current speeds, this would not boost efficiency or save money on labor.

I was intrigued by the mixed results here: ChatGPT seemed to get the right answer, but when asked to show its work, the logic was off. It was sort of like a student who guesses the answer they think the teacher wants but can’t explain why they picked it.

So I decided to try again with a different type of case in a cat…

Prompt:

“Write a cytologic description for this image and provide a diagnosis. It is from pleural effusion in a cat.”

Output:

Grade: F

This one is an epic fail! Virtually none of the description is remotely in the ballpark and the diagnosis is 100% wrong. Not only is this not a carcinoma, it’s not even cancer!!! The image shows a bacterial infection and a bunch of white blood cells responding.

An error like this is a BIG DEAL. The diagnosis is way off, the prognosis is totally different, and the treatment is miles apart (antibiotics +/- chest tubes or surgery vs chemotherapy). In this instance, grave harm would have come to the patient if anyone relied on this readout.

OK, maybe it is just particularly bad at cytology. Let’s choose an easier task: interpreting an EKG. This is a great baseline test because there are already accurate machine learning models that can match human cardiologists. I lobbed this softball at ChatGPT:

Grade: F

This EKG actually shows ventricular tachycardia (“v-tach”), a life-threatening arrhythmia requiring emergency medical treatment. V-tach is one of the main reasons public facilities have Automated External Defibrillators (AEDs) to resuscitate people who arrest. Calling one of the most deadly EKG patterns normal is about as wrong as it gets 💀

These results should not be totally surprising, as multiple previous studies (here, here) comparing both ChatGPT and image analysis AI algorithms to human radiologists found inferior performance on both knowledge and x-ray image interpretation.

What’s Happening Here?

I’m sure some AI optimists would counter, in response to the more egregious failures above, that these systems will keep getting better over time with larger datasets and refinements to their model architecture. That is probably true, although it also increases the cost of running the AI (see the final section below for why this is important). But better doesn’t necessarily mean good enough for life-and-death decisions, and a tool that is extremely accurate most of the time… except when it unpredictably hallucinates fatal misdiagnoses is no better than a tool that is consistently mediocre.

We need to know if there is a specific, intrinsic limitation that explains why large language model (LLM) AI tools like ChatGPT are failing at these tasks. It turns out there is.

Gary Marcus is a cognitive scientist and AI researcher who has been a vocal skeptic of the LLM approach to AI (he instead favors systems that learn rules about abstract concepts and symbols). He has written several recent Substack articles about why these systems struggle. In this one, he discusses how the sister OpenAI tool DALL-E for generating images (which also uses an LLM engine) consistently fails at producing non-classic images. For one example, it can easily generate a picture of an astronaut riding a horse (a slight visual tweak to a common concept and image), but cannot generate a HORSE riding the astronaut: there are few if any visual examples to train from, so the AI would need to understand the underlying concepts that define a “horse,” an “astronaut,” and “riding.” In another example, DALL-E cannot create images of watches set to specific times because it doesn’t actually understand what the hand positions mean; it merely mimics its vast training set filled with watch advertisements that are almost always set to 10:10 for aesthetic reasons.

“The proof? This time it’s not in the pudding; it’s in the watch. A couple days ago, someone discovered that the new multimodal ChatGPT had trouble telling time…Blind fealty to the statistics of arbitrary data is not AGI. It’s a hack. And not one that we should want.”

In another post, he talks about how LLMs fail at simple multiplication problems that a basic $5 calculator can do because they do not actually understand the abstract mathematical concept:

LLMs are basically just sophisticated guessing machines that use likelihood probabilities to scramble and remix their training set. Lymphoma is one of the most common diseases in abnormal lymph nodes in dogs. Many cat effusions are carcinomas. A huge number of continuing education articles on the web have pictures of normal EKGs to help people learn the basics. These LLM systems don’t know what they’re saying; they’re just blindly regurgitating the most common keywords from Google and Reddit.
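To make the "guessing machine" idea concrete, here is a deliberately crude Python sketch of a guesser that never looks at the image and simply returns whichever diagnosis appeared most often alongside a given context. This is a caricature for illustration, not how ChatGPT works internally: the function name `most_common_label`, the `TRAINING_EXAMPLES` list, and every count in it are made up for this example and are not real prevalence data.

```python
from collections import Counter

# Hypothetical, made-up "training set" of (context, diagnosis) pairs.
# The counts are illustrative only and are NOT real prevalence data.
TRAINING_EXAMPLES = (
    [("dog lymph node", "lymphoma")] * 7
    + [("dog lymph node", "reactive hyperplasia")] * 3
    + [("cat pleural effusion", "carcinoma")] * 6
    + [("cat pleural effusion", "bacterial infection")] * 4
    + [("EKG", "normal sinus rhythm")] * 9
    + [("EKG", "ventricular tachycardia")] * 1
)


def most_common_label(context: str) -> str:
    """Return the diagnosis seen most often with this context.

    Note that the image itself is never an input: the guess depends
    only on how frequently each label appeared in the training set.
    """
    counts = Counter(label for ctx, label in TRAINING_EXAMPLES if ctx == context)
    if not counts:
        raise ValueError(f"no training examples for context {context!r}")
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    for context in ("dog lymph node", "cat pleural effusion", "EKG"):
        print(context, "->", most_common_label(context))
```

With these invented counts, the guesser lands on lymphoma for the dog (right, for the wrong reasons) and on carcinoma and a normal EKG for the other two cases, which mirrors the pattern of hits and misses in my tests above.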

Will AI Ever Replace Diagnostic Specialists?

This is a tricky question to answer. Selfishly, I hope the answer is an emphatic NO. Yet it prompts an interesting ethical dilemma from a simple thought experiment:

IF a hypothetical AI system could be proven to be unequivocally superior to humans, how could we justify NOT using it to improve patient care and health outcomes?

If we accept that our highest duty is to patients, we would have to (reluctantly) accept a sufficiently accurate AI system. Then it becomes simply a question of whether or not it is practical to implement AI for routine interpretation of biopsies, x-rays, CT scans, EKGs, etc. Many of the factors for this question could be quantified in pretty simple monetary terms. Below is a basic framework for how I think about factors that could facilitate or inhibit adoption of AI for medical imaging. Simply put, once the amount of money on the favorable side outweighs the costs on the barriers side, companies will do everything they can to aggressively push AI (at least in veterinary medicine, where there is minimal regulatory oversight).

Favorable

Increased $ savings from better efficiency and/or lower labor costs

This is the big one!!! Diagnostic companies in veterinary medicine are for-profit entities (like Quest or Labcorp in human medicine) and would salivate over cutting costs to boost their profit margins. Whether you can get more work out of the same number of employees or you’re able to reduce headcount via AI (or both!!), this is the dream for the private sector

Reduced costs of rework or medical errors

Cases that have mistakes can result in re-processing of tissue and slides, require specialists to waste time re-doing cases, and in extreme examples, settlements or other financial costs of malpractice. Reducing these through AI is a tangible benefit

Many of the human pathology AI software companies that have sprung up have business models where they charge per-case or per-slide fees, or subscription fees, for running AI algorithms. Add-on revenue like this is a potentially attractive way to monetize AI.

Barriers

Medicolegal risks

As in the examples above, AI is currently unpredictable and could render a misdiagnosis at any time. All it would take is a few of these to rack up massive legal costs, to say nothing of the negative PR

Negative perception decreasing caseload

Right now, AI is a sensitive subject. Many people (understandably) don’t want their samples read out by a computer. The early adopters in this space face the risk that doctors and veterinarians will send their business elsewhere if they are automating tests in a risky way

Infrastructure and technical debt

It should go without saying that it is a lot more complicated to move and analyze a large whole slide image (WSI) DICOM file than a single still image of one field. Furthermore, many companies have data pipelines in terrible shape and rely on a duct-taped mess of legacy systems that don’t easily communicate with each other. Fixing this infrastructure is difficult, costly, and time-consuming.

GPU/data storage costs

Everything about AI is currently very expensive, from the GPU chips made by companies like NVIDIA to cloud storage systems like Amazon Web Services and Snowflake. While these costs will likely go down in the future, they are a huge money sink that makes automating these manual tasks less attractive in the short to medium term.
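The framework above boils down to simple arithmetic, which can be sketched in a few lines of Python. This is only a rough illustration under my own assumptions: the `AdoptionEstimate` class, its field names, and all of the dollar figures are hypothetical placeholders, not numbers from any real lab.

```python
from dataclasses import dataclass


@dataclass
class AdoptionEstimate:
    """Annual dollar estimates for one lab. Every field is a hypothetical input."""

    # Favorable factors
    labor_savings: float
    rework_savings: float
    addon_revenue: float
    # Barriers
    legal_risk: float
    lost_caseload: float
    infrastructure: float
    compute_and_storage: float

    def favorable(self) -> float:
        """Total dollars on the favorable side."""
        return self.labor_savings + self.rework_savings + self.addon_revenue

    def barriers(self) -> float:
        """Total dollars on the barriers side."""
        return (
            self.legal_risk
            + self.lost_caseload
            + self.infrastructure
            + self.compute_and_storage
        )

    def net(self) -> float:
        """Positive when the favorable side outweighs the barriers."""
        return self.favorable() - self.barriers()

    def favors_adoption(self) -> bool:
        return self.net() > 0


if __name__ == "__main__":
    # Made-up figures, chosen only to show how the comparison works.
    today = AdoptionEstimate(
        labor_savings=500_000,
        rework_savings=50_000,
        addon_revenue=100_000,
        legal_risk=300_000,
        lost_caseload=200_000,
        infrastructure=400_000,
        compute_and_storage=250_000,
    )
    print("net:", today.net(), "adopt:", today.favors_adoption())
```

The hard part in practice is not the arithmetic but estimating each input, especially the medicolegal risk, which depends on how often and how badly the AI gets a case wrong.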

From what I’ve seen with the current technology, those costs and risks far outweigh any benefits for the companies, and our jobs (and patients) are safe for now. Whether that continues to be the case in the coming years remains to be seen…

However, if AI experts like Gary Marcus are correct, any successful AI path forward will not come from statistical “guessing” machines like LLMs or deep-learning approaches—it will require alternative methods that can truly learn and apply fundamental rules and concepts like humans do.

I still believe in the optimistic future where AI helps doctors improve their patient care without negative impacts on the economy or risking lives. It will require all of us to stay current with this technology and be honest about both the potential benefits and limitations as it evolves.

I’m very curious what pathology and radiology will look like by the end of my career.

I think this is a great post, also applies to primary care. I wrote, and then deleted a post because it was too wonky, basically taking a complicated patient history and feeding it into ChatGPT to see how they would manage a chronic care visit for a patient with like 20 medical problems. It was pretty terrible. I hope to use ChatGPT with challenging diagnoses to give me more ideas, and perhaps be a personal assistant of sorts, but in terms of juggling diagnoses, seeing the whole person/animal, translating and counseling regarding treatment options, and just being a decent, compassionate human being, I think AI will be a partner not a captain for at least the next 30-40 years

What I love about this article is how well thought out and full of critical thinking it is. That’s something AI cannot realistically do YET, although I’m sure it’s around the corner. Humans still haven’t seemed to learn that shortcuts always mean more work!

My biggest AI fear is that some of the, shall we say, lazier youngsters (or perhaps just ignorant to critical thinking) will rely on AI far too much. Thankfully the majority of my surgeries are behind me - but I will say this...human and robot did my last very difficult abdominal surgery (Sugarbaker peristomal hernia repair with DaVinci), and severe pain and recovery was a lot easier and faster!