Falsely Accused of Using AI

Welcome to the new witch hunt

Dear Readers,

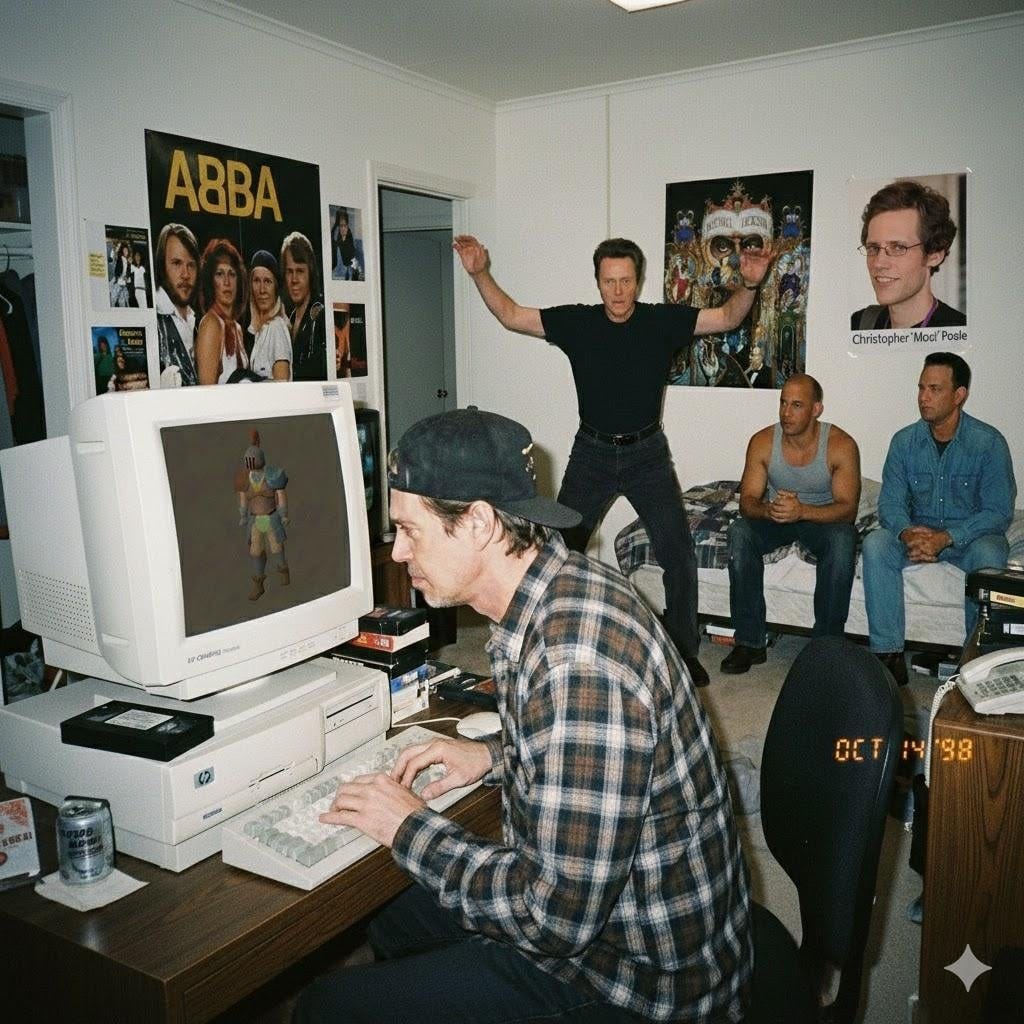

Today I had a very unsettling interaction online. It started while I was drinking my morning coffee and reading about the recent Hollywood dust-up where Quentin Tarantino slammed actor Paul Dano. A follow-up Google search revealed something peculiar: The AI summary got Tarantino’s statements completely backwards! Look at the screenshot below and compare it to his actual quote:

“[Dano] is weak sauce, man. He is the weak sister. [Daniel Day-Lewis] is eating him [alive]. Austin Butler would have been wonderful in that role. [Dano’s] just such a weak, weak, uninteresting guy,” Tarantino said, per Variety. “Daniel Day-Lewis shows that he doesn’t need a strong foil. The movie needs it. He doesn’t need anything. It’s supposed to be a two-hander and it’s not! … you put him with the weakest F*&^ing actor in SAG? The limpest dick in the world?”

Now, you might be saying to yourself, “Eric, you have too much free time on your hands,” or “Why do you care what movie stars say about each other?” Fair enough! However, I do think it provides a great example of several problems intrinsic to large language model chatbot summaries. First, and most obviously, it shows how poor their accuracy can be. Second, because Google is the most widely used search engine in the world, and these AI summaries are placed prominently at the top of the page, many people are going to simply take whatever they say at face value. It’s bad enough that people increasingly just skim headlines instead of reading articles, but now we have to deal with blatant falsehoods injected into our search results.

“AI derangement syndrome”

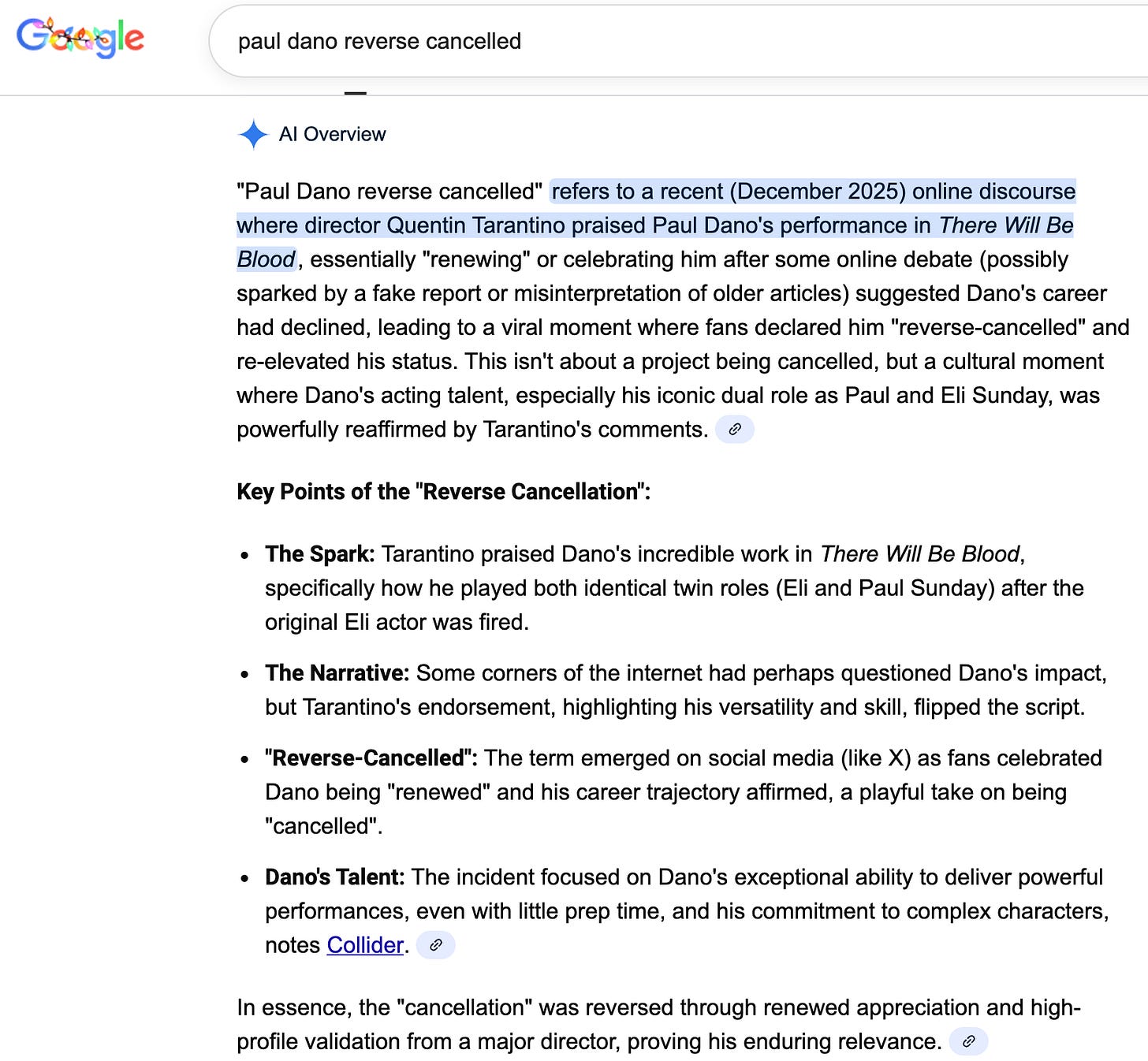

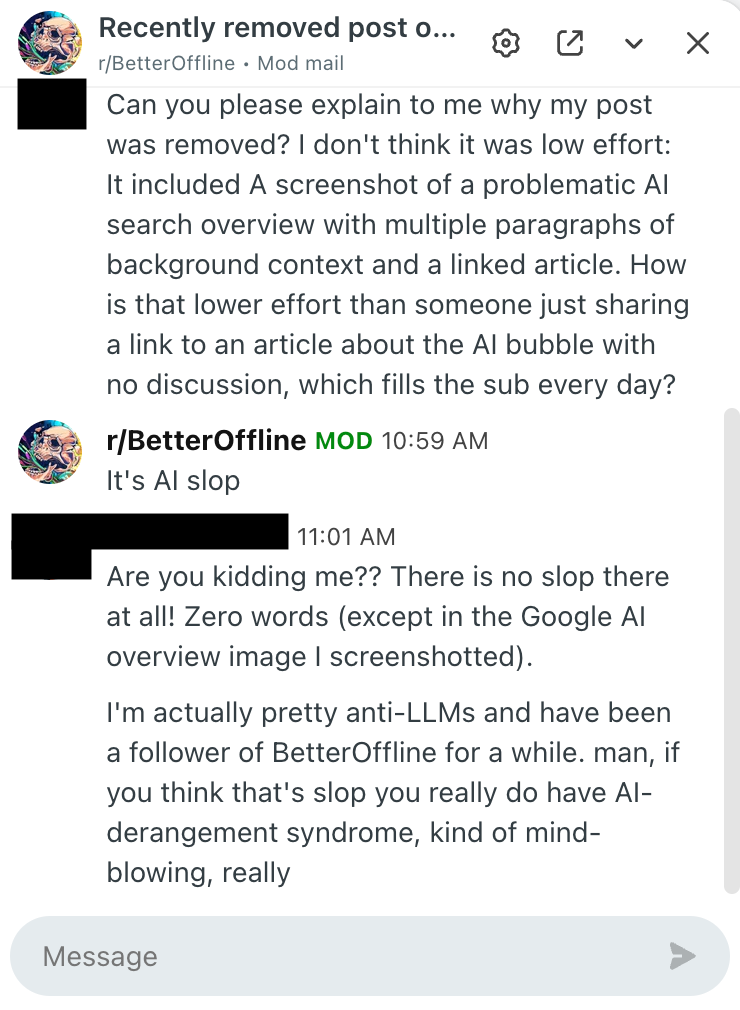

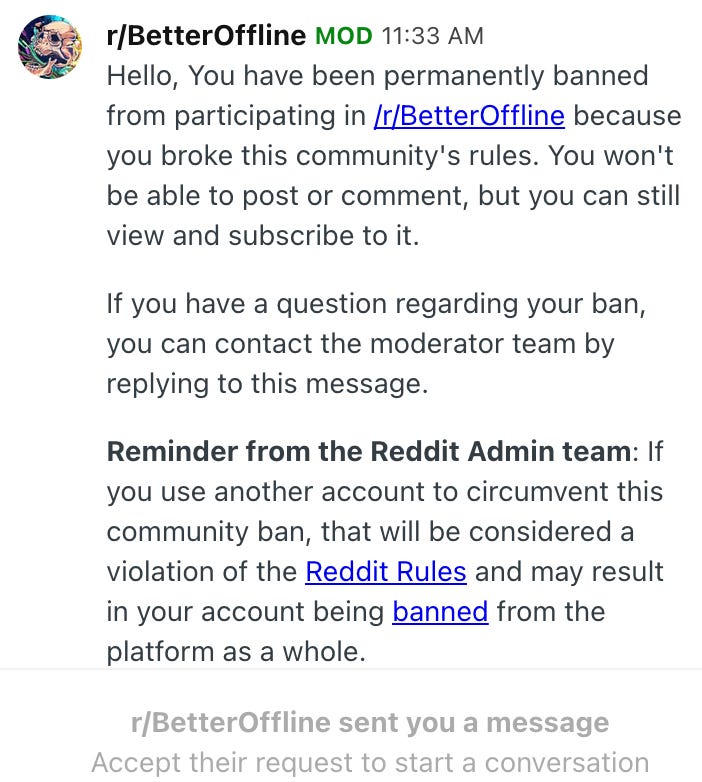

That’s actually just the set-up for the negative interaction. I went to post my experience on a subreddit called r/BetterOffline. I thought that community might be interested because they are highly critical of AI and frequently discuss issues related to ChatGPT, Gemini, and other LLMs. Much to my surprise, my post was immediately deleted by the moderators.

When I messaged them to ask why it was taken down, they told me the post was “AI slop”!!

The conversation got nastier from there, with the moderator ultimately snapping, “You have 23 community karma, stop pretending like you’ve contributed a single thing to this place. It’ll be a better community without you.”

I was stunned: WTF just happened? How did sharing a post about the problems of AI slop get labeled as slop itself??

Some people clearly loathe these chatbots so much that they see them lurking in every shadow, and can’t wait to tear down anyone they suspect of using ChatGPT (even if they didn’t). Commenters on the internet have dubbed this behavior “AI Derangement Syndrome” or “Anti-AI Psychosis.”

The new witch hunt

As is my wont, I think there are some broader lessons to be found in this anecdote about anonymous maniacs on the internet. I’m by no means the only person to be caught in the crosshairs of the AI witch hunt. Every day on Substack I see some pile-on attacking a viral article as “obvious slop”; having people say the same about me when it was 100% false makes me certain that a lot of those accusations are baseless, too.

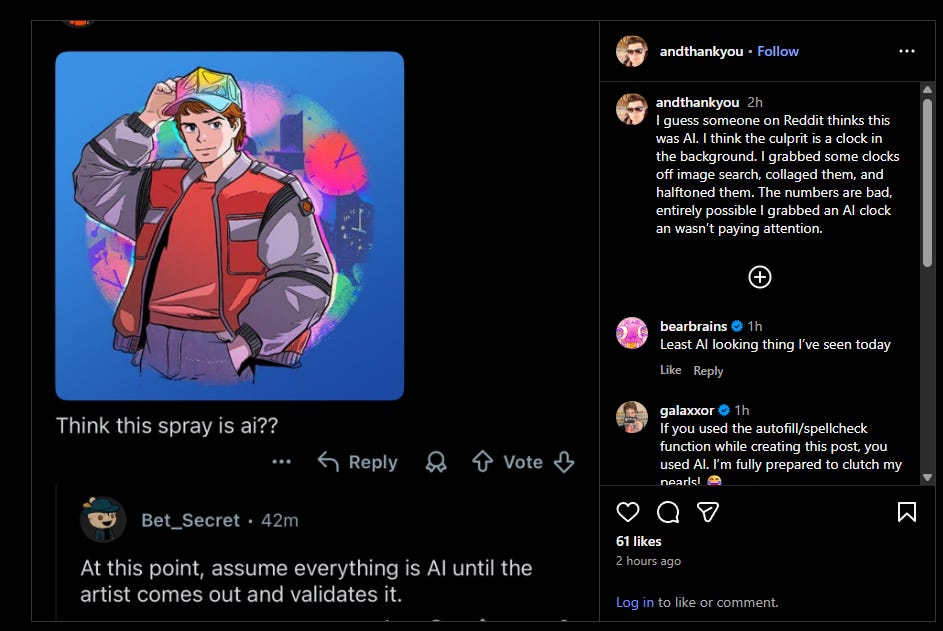

Recently, fans of the online battle royale game Fortnite blew up over claims that graphics in the game were generated with AI tools, such as this one:

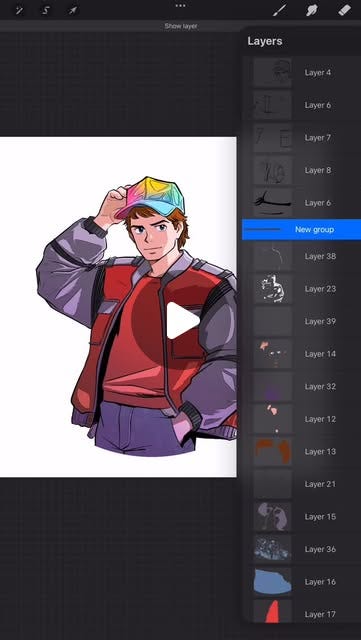

Believe it or not, the graphic designer who created that decal received hate mail and even threats from angry gamers! Sean Dove finally debunked the claim by showing a time-lapse video of him creating the illustration manually, layer by layer.

Similar accusations of cheating on homework and exams have been lobbed at students, often with dire consequences ranging from failing grades to losing a scholarship. While it’s certainly true that many students have been using ChatGPT and similar tools to do their assignments, it is absolutely not universal, and the allegations are often based on very flawed “AI detection” software or crude heuristic “tells.”

The madness has gotten to the point where any writing that uses an em-dash or lacks typos is assumed to be robot copy! Sorry, Emily Dickinson, I guess you were just an early pioneer of “slop” in the 1800s 🙄

This creates a perverse situation where some of the sharpest students will be accused of cheating due to their high-quality work, while weaker ones can potentially use chatbots to cheat and take steps to cover their tracks (like introducing minor errors and tweaks to throw off AI detectors).

A future where everything is in doubt

All of this really bums me out. This climate of finger-pointing creates a no-win scenario: whether or not you choose to use LLMs (in either a minimal or heavier capacity), angry mobs may attack you.

I spent this week working on two different pieces for All Science, and ended up publishing neither. The drafts were meandering, and I couldn’t get the tone or the closing points right. Was I tempted to use ChatGPT to help me through the writer’s block? Sure was! However, I refused because not only do I take great pride in my writing, but I also believe that the best pieces come from struggling with a topic until you find the perfect angle. And yet sharing some original writing about the pitfalls of LLMs still got me on the receiving end of pitchforks!

This problem goes beyond the hurt feelings of individual artists. Consider generative AI tools that create lifelike images and even videos. The technology has come a long way since the six-fingered hands and uncanny lighting that gave away DALL-E images immediately. Google’s recently released Nano Banana Pro[1] model produces scarily accurate images.

We are not prepared for a world where anything we see online can potentially be a computer-generated deepfake. This will rapidly erode our already fragile sense of shared reality, and the ubiquity of synthetic media will actually enable bad behavior, because people can now say any photographic evidence of wrongdoing is plausibly an AI hoax.

This is what I think the anti-AI witch hunt is really about: Fear of a rapidly approaching future where you can never be 100% certain something you didn’t witness in person is true. The original artist is often an incidental casualty.

How do we cope?

Verifying anything important with trusted sources rather than relying on anonymous accounts on social media will become more important than ever. We may be able to make progress with better watermarks and forensic tools to confirm the originality of content, although this will require the tech companies’ collaboration. Individual writers, musicians, and graphic artists might want to practice “defensive creativity” by documenting intermediate steps that show their work, like the Fortnite illustrator Sean Dove.

It’s ultimately going to take years of society getting used to these tools for us to adapt and find a healthy-ish equilibrium. In the meantime, you can help by not screaming at artists and shaming them for using AI (because they may very well be innocent).

—Eric

[1] Yes, that is the real product name, and yes, it is very stupid.

I recently got a comment on a Facebook post from someone I did not know. I thought the comment read like it was AI-generated. Later, I received a friend request from that person, which I ignored, because, obvious bot.

Today, I picked up a lady hitchhiking (common where I live) who I recognized, but whose name I didn't know despite the fact that we've chatted at a variety of social events.

"Oh, hi," she said as she buckled up. "I sent you a FB friend request the other day but maybe you haven't seen it yet...?"

So. I feel this. And she (a real live person whom I know, not a bot!!) and I are Facebook friends now.

If you think AI is influential now, just wait until the 2026 elections.