Weekend Roundup: The Scientific Credibility Gap

How scientists and doctors struggle to place data in context and communicate uncertainty

Trust in the scientific process is critical if society is to ensure optimal public health, fund research and development, and support policies to combat existential threats like climate change. Unfortunately, a recent Pew Research study found decreasing support for experts over the course of the last few years:

While there are likely many factors contributing to this phenomenon, including political polarization and misinformation/disinformation (often turbo-charged by social media algorithms), let’s face it: a lot of doctors and scientists are terrible at communication! I myself have been guilty of the cardinal sins in the past:

Too much jargon

Overcomplicating the story

Difficulty saying “I don’t know”

In today’s weekend roundup, I’m going to discuss some examples of good and bad science communication, and talk about ways the medical and scientific communities can rebuild faith in their work.

It Matters Who Does Science

One way to foster public confidence is to make sure researchers are as representative as society itself. Holden Thorp, the editor-in-chief of Science, has a great recent article on his Substack Science Forever that provides an eloquent defense of the need for diverse perspectives in science:

“Scientific research is a social process that occurs over time with many minds contributing. But the public has been taught that scientific insight occurs when old white guys with facial hair get hit on the head with an apple or go running out of bathtubs shouting “Eureka!” That’s not how it works, and it never has been. Rather, scientists work in teams, and those teams share findings with other scientists who often disagree, and then make more refinements. Then those findings are placed in the scientific record for even more scientists to examine and produce further adjustments. Eventually, theories become knowledge. All along the way, these scientists are conspicuously and magnificently human—with all the assets and flaws that humans possess. And that means that who those individuals are, and the backgrounds they bring to their work, have a profound influence on the quality of the end result.

…

The soundbite “trust the science” has been circulating recently. This framing is unfortunate. Because “the science” in this context is usually a snapshot of ideas or facts in a particular moment—and often from the perspective of a small number of people (or even one person). It would have been better to use a phrase like “trust the scientific process,” which would imply that science is what we know now, the product of the work of many people over time, and principles that have reached consensus in the scientific community through established processes of peer review and transparent disclosure.”


Communicating Uncertainty and Shifting Knowledge

“First, we guess.”

This is how the theoretical physicist Richard Feynman began his lecture on the scientific method. Science is a process of discovery, not a set of finalized facts carved in stone. It begins with questions and uncertainty, and is ever-changing. Researchers think of a gap in our knowledge, then formulate a hypothesis. For example:

“Are patients with high LDL cholesterol more likely to have heart attacks?”

Then, they gather data and conduct experiments to test that hypothesis against the “null hypothesis,” the possibility that there is actually no difference in heart attacks between patients based on cholesterol levels. When they get their results, they share them with the scientific community at conferences and in publications, inviting feedback on whether the information is reliable. There is a lot of additional nuance and complexity that goes into the scientific method, but that is the core of it.
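To make the idea of testing against the null hypothesis a little more concrete, here is a minimal sketch in Python. It uses a two-proportion z-test, which is only one of many ways such a comparison could be run, and the patient counts are invented for illustration (they do not come from any real cholesterol study). The function name `two_proportion_z_test` is my own.

```python
import math


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Two-sided z-test for a difference between two proportions."""
    rate_a = events_a / n_a
    rate_b = events_b / n_b
    # Under the null hypothesis both groups share one underlying rate,
    # so the events are pooled to estimate it.
    pooled = (events_a + events_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts, for illustration only:
# heart attacks among 1,000 patients with high LDL vs. 1,000 with normal LDL.
z, p = two_proportion_z_test(events_a=60, n_a=1000, events_b=30, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value says the observed gap would be surprising if the null hypothesis were true. It does not prove the hypothesis, which is why the replication step matters so much.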

The next step is critical: Other scientists and doctors ask the question again and try to disprove the original results. Some will ask slight variations on the question. Others will try to do more controlled experiments in test tubes with cells or other model systems to understand why patients with high LDL cholesterol have heart attacks. Still others will use what we learn from those studies to formulate new questions.

All of this means that what we know is constantly shifting and being challenged. Information and hypotheses that stand the test of time and constant scrutiny become Theory (scientific parlance for a widely accepted explanation, such as the Theory of Gravity and Germ Theory). This can understandably be confusing to watch from the outside, and scientists have not done a good job of explaining it.

The effects of communicating uncertainty on public trust in facts and numbers

Scientists have been especially reluctant to point out when the data we have is ambiguous or conflicts with other facts. Our human ego makes it innately hard to say “I don’t know.” Furthermore, many people fear that putting a spotlight on uncertainty will decrease the credibility of scientists.

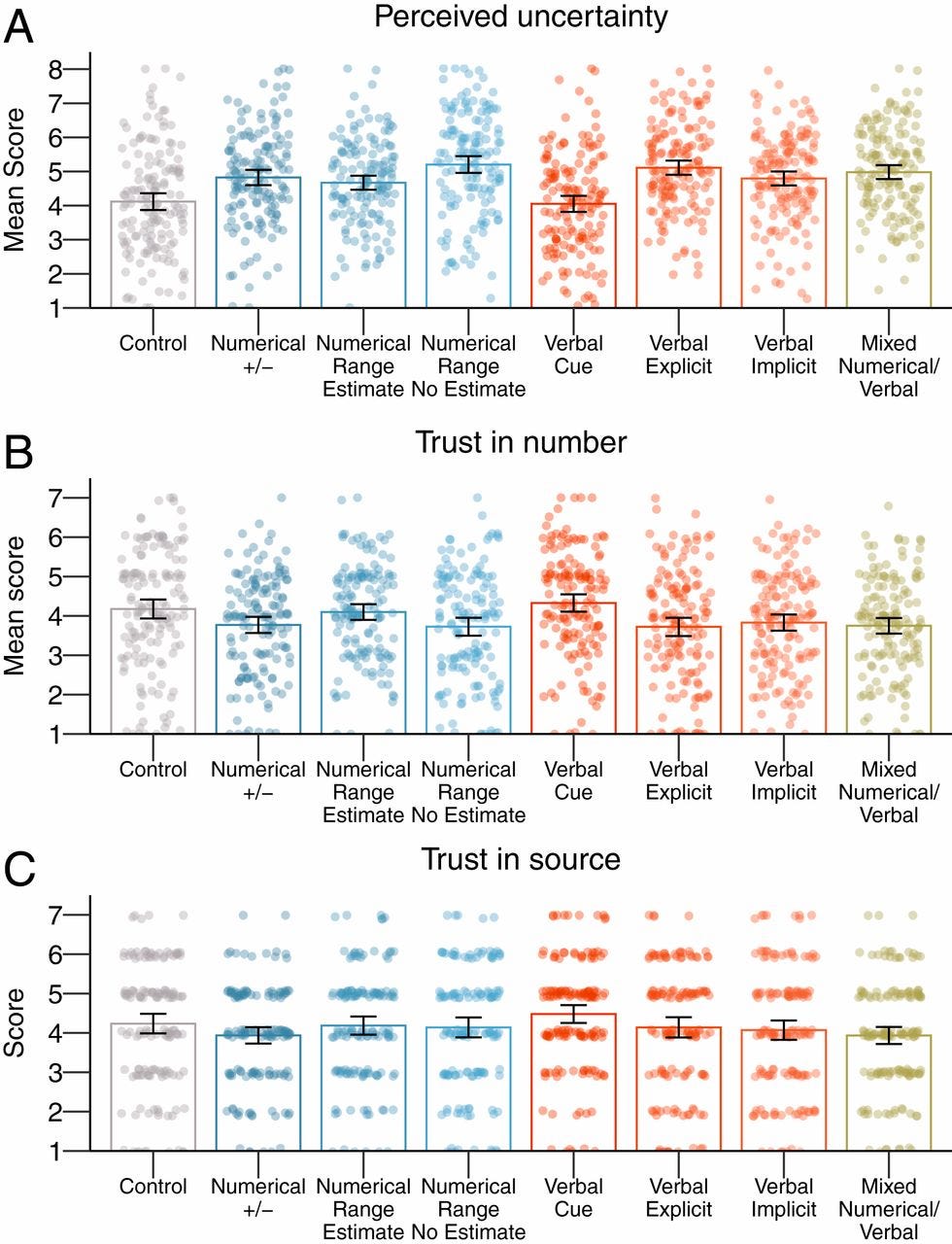

A 2020 study in the Proceedings of the National Academy of Sciences (PNAS) ran five experiments to see whether relaying uncertainty (in both verbal and quantitative terms) in reports on controversial issues like climate change and immigration decreased participants’ trust in the source.

Over the course of the experiments they found that relaying the uncertainty had little to no impact on the assessment of source credibility. This is good news for scientists and journalists who worry that providing this context will promote distrust. Long-term, more transparency may ultimately improve public confidence in the scientific process. (Of course, that is merely a hypothesis that needs to be tested!)

“The median isn’t the message”: How to communicate the uncertainties of survival prognoses to cancer patients in a realistic and hopeful way

The title of this 2019 oncology study takes inspiration from the famous Stephen Jay Gould essay of the same name following his diagnosis with mesothelioma at a young age (he died 20 years later of a second, unrelated tumor). All clinicians, but especially oncologists, face the challenge of telling an individual patient how long they may live with a given disease. While we can quote studies on the median survival times of thousands of patients, how long any specific person will live is completely unknowable.

This study investigates approaches to communicating prognosis to patients newly diagnosed with cancer. It shows that providing context on the variability of the numbers and the inherent uncertainty in prognostication is preferred by patients and leads to more accurate understanding.

From the abstract:

“This study investigated how doctors communicate the uncertainties of survival prognoses to patients recently diagnosed with life‐threatening cancer, and suggests ways to improve this communication. Two hundred thirty‐eight Norwegian oncologists and general practitioners (GPs) participated in Study 1. The study included both a scenario and a survey. The scenario asked participants to respond to a hypothetical patient who wanted to know how long (s)he could be expected to live. There were marked differences in responses within both groups, but few differences between the GPs and oncologists. There was a strong reluctance among doctors to provide patients with a prognosis. Even when they were presented with a statistically well‐founded right‐skewed survival curve, only a small minority provided hope by communicating the variation in survival time. In Study 2, 177 healthy students rated their preferences for different ways of receiving information regarding the uncertainty of a survival prognosis. Participants who received an explicitly described right‐skewed survival curve believed that they would feel more hopeful. These participants also obtained a more realistic understanding of the variation in survival than those who did not receive this information. Based on the findings of the two studies and on extant psychological research, the author suggests much‐needed guidelines for communicating survival prognoses in a realistic and optimistic way to patients recently diagnosed with life‐threatening cancer. In particular, the guidelines emphasise that the doctor explains the often strongly right‐skewed variation in survival time, and thereby providing the patient with realistic hope”
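For readers who want to see why a right-skewed survival curve leaves room for hope, here is a small simulation sketch in Python. It assumes survival times follow a log-normal distribution, and the median of 8 months and the spread parameter are numbers I picked purely for illustration; none of this is data from the study.

```python
import math
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

MEDIAN_MONTHS = 8.0  # assumed median survival (hypothetical)
SIGMA = 1.0          # assumed spread on the log scale; larger means a longer right tail

# Simulate 10,000 hypothetical patients with right-skewed survival times.
survival = [
    random.lognormvariate(math.log(MEDIAN_MONTHS), SIGMA)
    for _ in range(10_000)
]

median = statistics.median(survival)
mean = statistics.fmean(survival)
deciles = statistics.quantiles(survival, n=10)  # nine cut points: 10th to 90th percentile
p10, p90 = deciles[0], deciles[-1]
# Share of simulated patients who live more than twice the median.
beyond_double = sum(t > 2 * median for t in survival) / len(survival)

print(f"median survival:           {median:.1f} months")
print(f"mean survival:             {mean:.1f} months")
print(f"10th to 90th percentile:   {p10:.1f} to {p90:.1f} months")
print(f"living beyond 2x median:   {beyond_double:.0%}")
```

In a skewed distribution like this one, the mean sits above the median and a sizable minority of patients live far longer than the headline number, which is exactly the context the guidelines ask doctors to share.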

Likelihood of neoplasia for diagnoses modified by probability terms in canine and feline lymph node cytology: How probable is probable?

One of the focuses of this newsletter is grappling with the uncertainty and doubt inherent in medicine. As a pathologist, my contact with patients is indirect; I review laboratory data and slides of cells and blood under the microscope. My immediate “clients” are not the pet owners, but the referring veterinarians they are seeing. Sometimes my reports will end up in the hands of the pet owners themselves. Other times, they will be forwarded to consulting specialists who will comb over the medical history (and critique anything they think might be wrong). This cascade of different audiences means my reports must walk a tightrope: they must of course contain the best diagnosis I can provide, but I also have to “show my work” so that the referring veterinarians providing care understand why I made the assessment I did, and they must be clear even to general practitioners who are not steeped in the arcane language of pathology. Sometimes I need to convey delicate messages about prognosis or errors between the lines without upsetting the client or making them lose faith in their vet. In essence, a pathologist is a translator between the cellular world and the everyday world where a worried pet owner just wants to know “what’s wrong with my dog, and will he be OK?”

One frustration I hear from vets a lot is that they wish pathologists would just “say what they think it is.” When a diagnosis is clear under the microscope this is not a problem. For example, if I see a fungal organism like Cryptococcus…that’s the diagnosis, no beating around the bush. However, sometimes the diagnosis is ambiguous and we’re limited in how confident we can be. We may couch our interpretation in hedged terms like “probable carcinoma,” “possible lymphoma,” or “can’t rule out a sarcoma.” Other times we will provide the dreaded SEE COMMENTS, and wax poetic about our speculative theories and the reasons for our uncertainty. This is illustrated with the old adage in medicine:

“The length of the comment is inversely proportional to the confidence of the pathologist”

This 2018 study out of UC Davis is one of several recent papers that address this issue. It reviewed 367 lymph node biopsy reports and assessed how often the diagnoses were “definitive” versus couched in probabilistic terms (e.g., “possible” or “suspicious”).

What they found was quite interesting; in effect, the language used was associated with different diagnostic performance. When the diagnoses were unmodified (or “definitive”), there was higher specificity, meaning fewer false positives, but some diagnoses were missed. When pathologists used probability modifiers, there was higher sensitivity, but some false alarms were raised. This suggests that when a veterinarian asks “Why can’t you just tell me what you think it is?”, the real question would be: “What do you want my sensitivity and specificity to be?”
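The trade-off between sensitivity and specificity is easier to see with numbers. The sketch below, in Python, computes both for two ways of reading the same set of reports. The counts are invented for illustration and are not the UC Davis results; the labels "strict" and "lenient" and the helper `sensitivity_specificity` are my own.

```python
def sensitivity_specificity(tp, fn, fp, tn):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # share of true neoplasia cases that were flagged
    specificity = tn / (tn + fp)  # share of non-neoplastic cases correctly cleared
    return sensitivity, specificity


# Invented counts for 200 neoplastic and 200 non-neoplastic lymph nodes.
# "strict":  only unmodified ("definitive") diagnoses count as a positive call.
# "lenient": diagnoses hedged with "probable" or "possible" also count as positive.
thresholds = {
    "strict": dict(tp=140, fn=60, fp=2, tn=198),
    "lenient": dict(tp=185, fn=15, fp=20, tn=180),
}

results = {
    name: sensitivity_specificity(**counts)
    for name, counts in thresholds.items()
}

for name, (sens, spec) in results.items():
    print(f"{name:8s} sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```

With these made-up numbers, counting hedged diagnoses as positives catches more true cases at the cost of more false alarms, which mirrors the pattern described above.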

The Curious Side Effects of Medical Transparency

On the other side of the transparency debate, Dr. Danielle Ofri’s recent New Yorker article asks: How frank and direct should doctors be in their medical records, and what are the downstream consequences? What are the risks to patients’ anxiety if they can see the full, messy diagnostic thought process without the requisite context?

“One afternoon not long ago, I sat entering notes into a patient’s medical record. She was in her forties, and her labs showed anemia. The causes of anemia range from menstruation to cancer, and so pinpointing the correct underlying diagnosis is critical. Physicians are trained to formulate a full roster of possibilities, known as the differential diagnosis, and then to work down the list systematically. We’re taught to cast a wide net—celiac disease, parasitic infections, thalassemia, lead poisoning, liver disease, B12 deficiency, myeloma, sickle-cell disease, G6PD deficiency—because you’ll never make a diagnosis if you haven’t included it in your differential.

But I hesitated before entering my differential into the computer system. Should I include the more serious possibilities, even though they were much less likely? In the past, I wouldn’t have thought twice about it, as the chart served primarily as a tool for the medical team to communicate among ourselves. But a new law, the 21st Century Cures Act, had recently been fully implemented, making medical records open to patients by default, in real time, including doctors’ notes. My in-box was already jammed with panicked messages from people convinced that they had catastrophic illnesses, based on minuscule lab discrepancies and panic-inducing Google searches. How would my patient react to seeing my ruminations about possible colon cancer or duodenal ulcer in the note?”

…

From my end of the stethoscope, it’s always seemed obvious that patients should own their medical records, and be able to see them. But openness can be challenging in practice. When our hospital initially rolled out its patient portal, a few of my older patients asked that I remove references to erectile dysfunction from their medical records. Their adult children handled the household tech, they explained, and my patients preferred to keep their Viagra prescriptions private. In other cases, multiple family members can access a patient’s chart, messaging me about test results and treatment plans, and it can be complicated to deduce the hierarchy of responsibility. Advocates have raised increasing concerns about the ease with which abusers can gain access to victims’ medical records; health-care settings have traditionally been secure places for people experiencing domestic violence, elder abuse, and human trafficking, but, with medical records becoming more accessible, patients may feel less certain that their words are safe.”

From the narrow framing of her own clinical experiences, she zooms out to consider the historical context of harms caused by keeping patients in the dark about their medical conditions, alongside examples of how previous transparency laws have had mixed results or backfired:

“Historically, the medical profession has had little use for transparency. Grave diagnoses were routinely withheld, on the assumption that they would further patient suffering. The Black men who participated in the infamous syphilis study at Tuskegee, in 1932, were not told that the trial aimed to study untreated syphilis, nor were they made aware of—or offered—penicillin, which became widely available the following decade. Generations of patients with mental illness were often institutionalized with little or no information released to them or their families.

There are strong ethical reasons, therefore, to pursue transparency in the medical record. But, as Pozen points out, we should not be lulled into treating transparency as a first-order good, like compassion, respect, avoiding harm, or putting the patient first. In a recent survey of more than eight thousand patients conducted by OpenNotes, nearly all the respondents said that they preferred immediate access to their test results, even if their doctors hadn’t yet reviewed those results. This was true even for the vast majority of people who said that they’d experienced increased worry in the face of results that were abnormal. It’s an understandable preference—one that every patient has the right to hold. But simply throwing open the medical record and calling it a day allows us to rest on our laurels without doing the hard work of fixing what’s inside. Police departments often point to body cameras as evidence of accountability without actually addressing the problem of police violence. Lawmakers can laud themselves for their transparency via C-span without having to engage in the gritty compromise needed to move legislation forward. Transparency might better be viewed as one possible means to desirable ends—not an end in and of itself.”

Don’t Look Up

This Netflix movie is nominally about a comet hurtling towards Earth and the scientists trying to warn folks to act to prevent calamity. However, the subtext is actually a pitch-black satire of our collective paralysis over climate change. In a bit of meta coincidence, the movie came out in the middle of the COVID-19 pandemic, and many of the same themes applied.

Fingers are pointed in every direction. Scientists who try to inform the public about their work but become seduced by money and celebrity status. Sexism that disregards women in science. Shallow TV hosts who care more about ratings than misinformation. Citizens who value entertainment over discussing anything serious.

It’s bleak, but also frequently hilarious. The movie offers plenty of examples of what not to do in science communication, although it does not provide much in the way of solutions.

Randall Munroe of xkcd Takes Silly Scientific Questions Seriously

If there is one takeaway from “Don’t Look Up,” it’s probably that for tough topics to permeate the general public's consciousness, they have to be entertaining (David Foster Wallace would probably agree). Enter Randall Munroe, the former NASA robotics engineer who writes the webcomic xkcd. In fact, the lede graphic for today’s article is from his site.

Munroe describes xkcd as “a webcomic of romance, sarcasm, math, and language,” but this fails to capture the breadth of subjects he makes comedy out of, which range from theoretical physics to oncology (his partner recently battled breast cancer).

In the last few years he has brought his artwork and knack for explaining difficult technical concepts to several books for the general public. In Thing Explainer, he provides funny and relatable explanations for everything from how airplanes fly to the laws of the solar system and periodic table of elements using only the 1,000 most common English words. In What If? (and now its sequel), he takes seriously the kind of absurd questions only a child could ask, like “Could you drop a humongous ice cube into the ocean from space to cool the water?” and “Could you create a jetpack from a bunch of machine guns firing downward?”, and uses them as jumping-off points to explain the underlying scientific principles.

Bonus: The Dunning-Kruger Effect, illustrated

While I’m discussing nerdy comics and graph-based humor, I would be remiss not to also recommend Saturday Morning Breakfast Cereal (or SMBC for short). Fair warning: it often includes crass and off-color humor. The comic below succinctly captures why it can be so hard to have productive conversations about anything controversial, whether political or not:

Reminder: I don’t get any commission or other benefit for links on this Substack; they are just for reader convenience. I generally try to link to the main publisher page, but sometimes the pages are for e-stores.

There’s a disparity in understanding that isn’t easy to resolve between the “experts” (for want of a better term) and the public. The issue is how to create a pact between people who arrived at conclusions through years of training, specialty, and rigorous inquiry, and those whose understanding is limited by a lack of those things, by design or accident.

I take as a given that, as a general rule, those who traffic in knowledge would find it beneficial to syndicate as much of it as possible (save instances of sociopathy, which are comparatively rare). However, specialty in knowledge from a purely altruistic standpoint is still rare relative to those who want to abominate for personal gain - the snake-oil merchants and con artists.

What makes it worse now - by possibly an exponential function - is the combination of the almost universal access to means of communication, the almost free avail of this access, and the ability to do it under cover of anonymity. Given a receptive and relatively naive population, you have the oxygen-fuel-ignition trifecta of a conflagration that is very difficult to stifle.

I can see this frustration among the scientists and scholars who rightfully find this to be a gross injustice, some of whom might contend that standing up for knowledge is an exercise in futility or simply not their business. I can’t blame anyone in that camp, but it won’t change the situation. I find it frustrating myself. It was bad enough being a skeptic when the internet was still specialized to dedicated conspiracy theorists; smartphones and wi-fi have created a swath of even casual conspiracy theorists.

All is not lost, however. In a practical sense, much of it is still just bluster, even when media introduce simplistic, reductive narratives that all but blatantly indicate that the intent is to indoctrinate and not inform. Most of those who buy into unscientific ideas that fly in the face of empirical fact - numerous though they may be - are verbal hypocrites with next to no influence (save spreading rumours). As sordid as that may seem, these characteristics mean they’re not acting on their ideals; they’re just a positional “club good” that maintains the air of approbation among their peers.

Granted, this doesn’t mean scientists and public intellectuals should throw up a white flag; quite the contrary. But it does mean it may be prudent to jettison the ideal for a highly-informed populace for fear it could lead to rout and chaos. I doubt it will for two reasons: one, chaos and conflict are tiresome, and people will only put up with it to a point. And two, as Steven Pinker has amply demonstrated, all metrics of standard of living show a steady global increase on average. I would also add that many of the more pernicious forms of pseudoscience - bloodletting, trepanation, pressings for witchcraft, executions for heresy, carpetbagging, race-based phrenology - are, in general, things of the distant past. Many of the ones we deal with now that persist like religion and astrology are, if you take it globally, of comparatively little consequence (even if you account for terrorism), and the ones that are widely pervasive - conspiracy theories like 9/11 Truth, for instance - are often flash-in-the-pan.

I don’t think scientists should fret too awful much about the influence of knowledge, because if you take any single slice of history, it seems like the influence has been minimal. But the efforts are not in vain. Anyone can name any number of scientists whose influence was sufficient to make them prominent names, like Galileo or Einstein, and if we’ve gone in one century from the germ theory of disease to simulating human intelligence through automation, putting humans on the Moon, cracking the genome, syndicating the internet, and ensuring that some 95% of the population is basically literate, I’ll call that a victory.

Yes, the movie Don't Look Up was brilliant, and very creative, I've watched it a number of times. It keeps getting better with each viewing, imho.

I do have one serious complaint however. Everyone says the movie is a commentary on our relationship with climate change. Ok, that sounds reasonable, and if true the movie does a good job. But...

Climate change is not the existential threat that we are ignoring in a Don't Look Up manner. Nothing compares to the threat presented by nuclear weapons, and it seems nowhere is denial disease stronger than on that subject. If Don't Look Up is about climate change, and the producers forgot about nuclear weapons, the irony is just too painful.

Here's the first thing I did on Substack. There is zero interest.

https://www.tannytalk.com/s/nukes